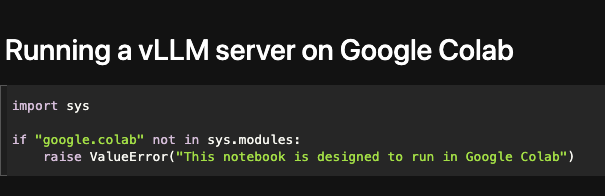

vLLM on Google Colab

Motivation

vLLM is a popular library for fast LLM serving.

I needed to test my code with vLLM but didn’t have access to the actual server. I couldn’t run it locally on a GPU either (Apple Silicon is not yet supported), so I set up a vLLM server on Google Colab and used LiteLLM to access it from my computer.

This gist shows how it can be done.